The problem with averages

Kelly Criterion and Portfolio Allocation

Grab a cup of coffee for this one. It’s one of our most in-depth reports.

Let’s start with a simple game.

You start with $100 and play a coin toss game. If it’s heads, you win 50% of your bet amount; if it’s tails, you lose 40%. To show you how it works, suppose you bet your entire balance on every toss:

The first toss is heads — You have $150 ($100 + $50 gain)

Second is also heads — You have $225 ($150 + $75 gain)

Third toss is tails — You have $135 ($225 - $90 loss)

And so on.

Would you play this game? We would have, since the expected value of each toss is positive. On the first toss, you have a 50% chance of winning $50 (a 50% gain on heads) and a 50% chance of losing $40 (a 40% loss on tails), which puts your expected balance after the toss at $105 (an expected gain of $5). So intuitively, the more you toss, the more money you should make.

If you write a program, simulate this experiment across a million participants (1 toss per minute), and average their results, you will see a favorable trend.
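If you want to try this yourself, here is a minimal Python sketch of that experiment. The function names, the seed, and the smaller scale (20,000 players over 10 tosses, to keep it quick) are our own choices for illustration, and it assumes every player bets their full balance on each toss.

```python
import random

def play(tosses, start=100.0, rng=random):
    """One player betting the whole balance each toss: +50% on heads, -40% on tails."""
    wealth = start
    for _ in range(tosses):
        wealth *= 1.5 if rng.random() < 0.5 else 0.6
    return wealth

def ensemble_average(players, tosses, seed=0):
    """Average final balance across many independent players (seed is arbitrary)."""
    rng = random.Random(seed)
    return sum(play(tosses, rng=rng) for _ in range(players)) / players

if __name__ == "__main__":
    tosses = 10
    print("simulated average:", round(ensemble_average(20_000, tosses), 2))
    print("expected value:   ", round(100 * 1.05 ** tosses, 2))
```

The simulated average should land close to $100 × 1.05^10 ≈ $163, because each toss multiplies the expected balance by 1.05.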

This is precisely what our intuition would also tell us. We are tossing a coin, and there is a 50/50 chance of either losing 40% or winning 50%; we should be gaining on average.

But, the problem with finding the average is that your outcome might be very different from the average outcome. You can only play the game once — just because the average is good doesn’t imply a great outcome for you.

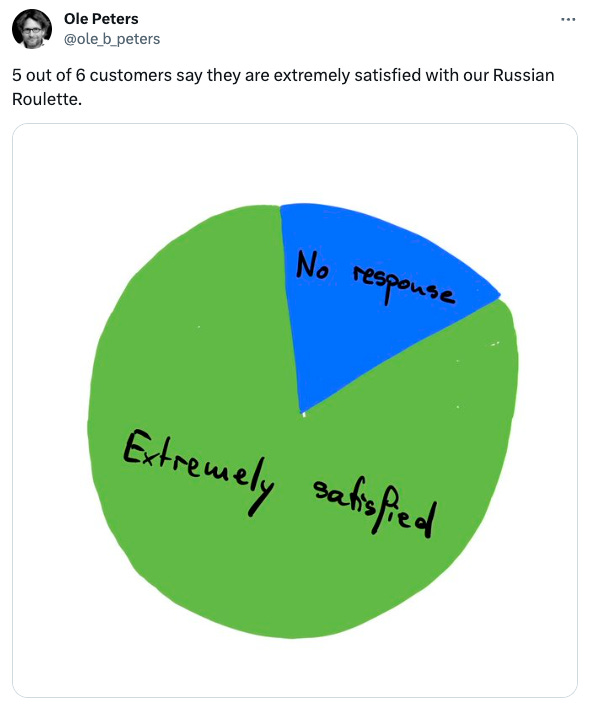

As Dr. Ole Peters puts it, it would be akin to taking the customer survey for a game of Russian Roulette and concluding it’s a great game.

To understand what is most likely to happen to you, an individual playing the game, we have to simulate a single person playing the game over a long time. Here, we see a very different trend:

The phenomenon we are experiencing is known as gambler's ruin. The astute among you might have already noticed that a 50% gain versus a 40% loss is not a fair game.[2] Due to the multiplicative nature of the betting, losses have a more significant impact. If you start with $100 and lose $40 (a 40% loss), you're left with $60. To get back to $100, you must win not 40% but approximately 67% of your new amount. This asymmetry means that a loss requires a disproportionately larger gain to recover.
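To put numbers on that asymmetry, here is a small Python sketch. It computes the gain needed to undo a loss, the typical per-toss multiplier (the geometric mean of the two outcomes), and the path of one player over many tosses. The function names and the seed are hypothetical choices of ours, and it again assumes the full balance is bet each time.

```python
import math
import random

UP, DOWN = 1.5, 0.6  # balance multipliers from the game: +50% on heads, -40% on tails

def gain_needed_to_recover(loss):
    """Fractional gain required to undo a fractional loss (0.4 gives about 0.667)."""
    return loss / (1.0 - loss)

def growth_factor_per_toss():
    """Geometric mean of the two multipliers: the typical long-run factor per toss."""
    return math.sqrt(UP * DOWN)

def single_player_path(tosses, start=100.0, seed=1):
    """Balance of one player after each toss (seed is arbitrary, for repeatability)."""
    rng = random.Random(seed)
    wealth, path = start, [start]
    for _ in range(tosses):
        wealth *= UP if rng.random() < 0.5 else DOWN
        path.append(wealth)
    return path

if __name__ == "__main__":
    print("gain needed after a 40% loss:", round(gain_needed_to_recover(0.4), 3))
    print("typical multiplier per toss: ", round(growth_factor_per_toss(), 4))
    print("one player after 2,000 tosses:", single_player_path(2000)[-1])
```

The typical multiplier comes out below 1 (about 0.949), so a single player's balance tends to shrink by roughly 5% per toss even though the expected value of each toss is positive.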

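Given the equal probability of winning and losing, this asymmetry means the typical player almost certainly loses over the long term: one head followed by one tail multiplies your money by 1.5 × 0.6 = 0.9, a 10% loss for every such pair. So, why did the portfolio keep increasing in the first simulation of 1 million players?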

It was because of the outliers in the simulation.

Consider how the distribution of returns for 1 million players changes across 60 tosses:

Of the 1 million players who start with $100, about one-third are left with less than $1 after 60 tosses. Close to 80% of the players have less than they started with. But the wealthiest player now has ~$100M.

Due to these few outliers, the average is close to $2,000 (a cool 20x return on initial investment). But the average has nothing to do with how most players experience the game. This is the ergodicity problem in economics, where the average outcome across a group at one point in time does not match the outcome a single entity experiences over time.
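These figures can also be checked without simulating anyone. After 60 tosses, a player's balance depends only on how many heads they got, and that count follows a binomial distribution. The sketch below is our own illustration (the function names are hypothetical) and assumes a fair coin with the full balance bet on every toss.

```python
from math import comb

def wealth_after(heads, tosses, start=100.0):
    """Balance after a given number of heads out of `tosses` full-balance bets."""
    return start * 1.5 ** heads * 0.6 ** (tosses - heads)

def outcome_summary(tosses=60, start=100.0):
    """Exact shares of players below $1 and below the start, plus mean and median."""
    total = 2 ** tosses
    prob = [comb(tosses, k) / total for k in range(tosses + 1)]
    wealth = [wealth_after(k, tosses, start) for k in range(tosses + 1)]

    # Median: the first head count whose cumulative probability reaches 50%.
    cumulative, median = 0.0, wealth[-1]
    for p, w in zip(prob, wealth):
        cumulative += p
        if cumulative >= 0.5:
            median = w
            break

    return {
        "share_below_1": sum(p for p, w in zip(prob, wealth) if w < 1.0),
        "share_below_start": sum(p for p, w in zip(prob, wealth) if w < start),
        "mean": sum(p * w for p, w in zip(prob, wealth)),
        "median": median,
    }

if __name__ == "__main__":
    for name, value in outcome_summary().items():
        print(f"{name}: {value:.4f}")
```

Under these assumptions, the exact calculation lines up with the simulation: roughly 35% of players end below $1, about 82% end below their starting $100, and the average is about $1,868, while the median player holds only around $4.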

The stock market is a classic example of a non-ergodic system. Even though the U.S. stock market increased shareholder wealth by $47.4 trillion over the last 100-odd years, only the top 1,000 stocks (~4% of the base of 26,000+ stocks) accounted for all the wealth creation. The most common one-decade buy-and-hold return was -100%.[3]

Understanding that investing is non-ergodic has significant implications on how we approach investment decisions and asset allocation.

How to win when you have an edge?

In any game where you can lose a fraction of your wealth, if you play indefinitely and your wealth is finite (which it is), the probability of eventual ruin is high. You will always run into a streak of bad luck eventually (as we saw in our single-player simulation).

But this will only apply if you are betting your whole portfolio every time. A better way would be to conserve your portfolio and bet a fraction of your assets each turn. In the simulation we created, if we just change the bet amount to 20% of the portfolio instead of 100%, we see an interesting result.
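To see why the bet size matters so much, here is a short Python sketch of the typical per-toss multiplier when you stake only a fraction of your balance. The function name and parameters are ours, for illustration only; the gain and loss values come from the game above.

```python
def growth_factor(fraction, gain=0.5, loss=0.4, p_heads=0.5):
    """Typical (geometric-mean) balance multiplier per toss when staking `fraction`."""
    up = 1.0 + fraction * gain     # balance multiplier after heads
    down = 1.0 - fraction * loss   # balance multiplier after tails
    return up ** p_heads * down ** (1.0 - p_heads)

if __name__ == "__main__":
    for fraction in (1.0, 0.5, 0.2):
        print(f"stake {fraction:.0%}: typical multiplier {growth_factor(fraction):.4f}")
```

Staking 100% gives a typical multiplier of about 0.949 per toss (shrinking), while staking 20% gives about 1.006 (growing). A small positive edge per toss compounds into a large difference over many tosses.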

By making this simple change, instead of ending with zero, we now end up with hundreds of thousands of dollars.[4] This optimization was first worked out by John Kelly, who developed the Kelly Criterion while working on signal noise issues in long-distance telephone lines. An unexpected benefit of the system is that it lets us maximize how fast our portfolio grows while limiting the risk of losing everything.

Using the Kelly Criterion,[5] we avoid betting too much (which could lead to bankruptcy after a string of bad outcomes) and betting too little (which would fail to capitalize on our edge). While the principle is intuitive, investors rarely use it in their portfolios.
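For the coin toss game, the Kelly fraction can be sketched in a few lines of Python. The closed-form expression below is the standard Kelly formula for a bet that wins `gain` times the stake with probability `p_win` and loses `loss` times the stake otherwise; it is not spelled out in this report, so treat it (and the function names) as our assumption. A brute-force search over bet sizes is included as a sanity check.

```python
import math

def kelly_fraction(p_win, gain, loss):
    """Stake maximizing p*ln(1 + gain*f) + q*ln(1 - loss*f): f = p/loss - q/gain."""
    q = 1.0 - p_win
    return p_win / loss - q / gain

def log_growth(fraction, p_win=0.5, gain=0.5, loss=0.4):
    """Expected log growth per toss when staking `fraction` of the balance."""
    return (p_win * math.log(1.0 + gain * fraction)
            + (1.0 - p_win) * math.log(1.0 - loss * fraction))

def best_fraction_on_grid(steps=100):
    """Check the formula by trying every stake from 0% to 100% in equal steps."""
    candidates = [i / steps for i in range(steps + 1)]
    return max(candidates, key=log_growth)

if __name__ == "__main__":
    print("Kelly fraction (formula):", kelly_fraction(0.5, 0.5, 0.4))
    print("Kelly fraction (search): ", best_fraction_on_grid())
```

Under these assumptions, both approaches point to staking about 25% of the balance per toss, a little above the 20% used in the simulation above. Staking less than the Kelly fraction still grows the portfolio, just more slowly and with smaller swings.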

Using Kelly Criterion to invest

In practice, the stock market does not give us fixed odds to work with. A simplified way to invest with the Kelly system is to invest more when the market is undervalued and invest less when the market is overvalued.